MAELSTROM dissemination workshop (28 March) and Machine Learning Workshop (29 March - 1 April)

Abstracts

Improving the radiative scheme with machine learning on a heterogeneous cluster

1Atos

The radiative scheme is one of the most time-consuming components when running the IFS. For computational reasons it is run on a coarser grid than the IFS, in both time and space. Replacing the radiative scheme with a machine learning surrogate could reduce the processing time and thus allow the IFS to access updated radiation information more frequently, increasing the accuracy of the overall results. Currently, clusters are not all equipped with GPU nodes, leading to heterogeneous clusters and probable bottlenecks in accessing AI-accelerated nodes. MPI RMA (Remote Memory Access) enables communication between CPU and GPU nodes in a cluster and addresses the issue of heterogeneity. This talk discusses work in progress on the radiation scheme, covering both the creation of the surrogate model and its connection through MPI RMA to accelerate and improve computation on a heterogeneous cluster.

A web tool for automating fish age reading using deep learning

1Hellenic Centre for Marine Research

Age reading of fish from otolith images provides key information for the sustainable exploitation of fisheries resources. However, extracting age information from otolith images requires considerable effort by experienced readers. This suggests a need for cost-effective approaches that could facilitate a more streamlined analysis. Here, we present DeepOtolith, an automatic web-based system for estimating fish age by combining otolith images with deep learning. DeepOtolith receives otolith images from a specific fish species as input and predicts fish age. DeepOtolith is based on convolutional neural networks (CNNs), a class of deep neural networks well suited to analysing images in computer vision tasks. DeepOtolith currently contains three case-study species, but it can be extended to include more species from interested researchers. DeepOtolith is accessible at http://otoliths.ath.hcmr.gr/.

A critical view on the suitability of Machine Learning techniques to downscale climate change projections.

1AEMET

Machine Learning is a growing field of research with many applications. It provides a series of techniques able to solve complex nonlinear problems, which has promoted their application to statistical downscaling. Intercomparison exercises with other classical methods have so far shown promising results. Nevertheless, many evaluation studies of statistical downscaling methods neglect the analysis of their extrapolation capability. In this study we aim to issue a wake-up call to the community about the potential risks of using machine learning for statistical downscaling of climate change projections. We present a set of three toy experiments, applying three commonly used machine learning algorithms (two different implementations of Artificial Neural Networks and a Support Vector Machine) to downscale daily maximum temperature, and comparing them with classical Multiple Linear Regression. We have tested the four methods inside and outside their calibration range, and have found that the three machine learning techniques can perform poorly under extrapolation. Additionally, we have analysed the impact of this extrapolation issue as a function of the degree of overlap between the training and testing datasets, and have found very different sensitivities for each method and specific implementation.
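The extrapolation risk described in this abstract can be illustrated with a minimal sketch. It is not the authors' experiment: the data are hypothetical, and k-nearest-neighbour regression is used here only as a stand-in for a flexible non-parametric learner (the study itself used Artificial Neural Networks and a Support Vector Machine).

```python
# Toy illustration of extrapolation outside the calibration range.
# Assumptions: synthetic linear "truth"; kNN stands in for a flexible ML model.

def fit_linear(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def knn_predict(xs, ys, x_new, k=3):
    """Average the targets of the k training points closest to x_new."""
    nearest = sorted(zip(xs, ys), key=lambda pair: abs(pair[0] - x_new))[:k]
    return sum(y for _, y in nearest) / k

# Hypothetical calibration data: predictor values 0..20 with a linear truth.
train_x = [float(x) for x in range(21)]
train_y = [1.0 + 2.0 * x for x in train_x]

a, b = fit_linear(train_x, train_y)
x_out = 30.0                                   # well outside the calibration range
truth = 1.0 + 2.0 * x_out
linear_pred = a + b * x_out                    # follows the fitted trend
knn_pred = knn_predict(train_x, train_y, x_out)  # saturates at the edge of the training data
print(truth, linear_pred, knn_pred)
```

In this toy setting the linear regression follows the trend beyond the training range, while the neighbour-based model cannot predict values larger than those it has seen.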

Hybrid Physics-AI Approach for Cloud Cover Nowcasting

Recent works using AI have explored cloud cover nowcasting, showing that a future sequence of satellite images can be predicted from a sequence of past observed images, but with some defects: it is not always possible to transport structures that are abnormally attenuated. Moreover, using numerical resources (data, computation) to learn physical processes that are already known, e.g. the advection, to the detriment of more complex processes, appears to be a waste of resources. To tackle these issues, we propose a novel hybridization between physics and AI, where part of the known physical equations is mixed with state-of-the-art Deep Learning techniques. In this work we designed a hybrid physics-AI model that enforces a physical behaviour, multi-level transport dynamics, as a hard constraint for a trained U-Net architecture. The results show qualitative improvements of the hybrid formulation compared to a U-Net without physics constraints, yet without the large overhead of recent generative techniques.

High Spatio-temporal wind forecasting with focus on wind energy using a combined statistical – machine learning approach

1ZAMG

On- and off-shore wind energy production in Europe has increased in recent years and will continue to rise, serving the ambitious goal of providing fossil-fuel-free energy. To achieve this, existing older sites are being adapted and equipped with newer wind turbines, and new sites are being explored and investigated. In Austria, with its rather flat eastern part, hilly northern and southern parts, and mountainous regions, suitable space is scarce. Suitable mountainous regions are often located close to existing tourism infrastructure (skiing resorts) or in regions with very limited infrastructure, and are thus hard to explore.

However, when exploring and building wind energy production sites in mountainous regions, numerical weather prediction (NWP) models are often too coarse; even convection-permitting models are rather coarse compared to the size of a wind farm (or skiing resort) in complex terrain. Post-processing with statistical and/or machine learning models can improve the NWP forecasts to a certain extent when combined with either highly resolved target fields, e.g., reanalysis and analysis fields, or spatially interpolated observations.

In this study we demonstrate the usage of a combination of a neural network and ensemble model output statistics (EMOS, SAMOS) for post-processing NWP forecasts for wind speed to a spatial resolution of 1 x 1 km for Austria. Different input NWP models are combined with static fields such as topography and slope to add embedded knowledge on the surroundings to the model. For a selected subregion a test study using 100 x 100 m resolution will be presented.
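As a rough illustration of the EMOS idea mentioned above, the sketch below maps an ensemble mean and variance to a Gaussian predictive distribution and scores it with the closed-form CRPS. The coefficients `a`, `b`, `c`, `d` and all numbers are hypothetical, and the plain Gaussian form is an assumption of this sketch; operational wind-speed EMOS often uses a distribution bounded at zero, and the abstract does not specify the configuration used.

```python
import math

def emos_gaussian(ens_mean, ens_var, a, b, c, d):
    """Map ensemble mean and variance to a predictive mean and standard deviation."""
    mu = a + b * ens_mean
    sigma = math.sqrt(c + d * ens_var)
    return mu, sigma

def crps_gaussian(mu, sigma, obs):
    """Closed-form CRPS of a normal predictive distribution N(mu, sigma^2)."""
    z = (obs - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return sigma * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))

# Hypothetical ensemble statistics (m/s) and hand-picked coefficients.
mu, sigma = emos_gaussian(ens_mean=6.0, ens_var=4.0, a=0.5, b=0.9, c=0.25, d=0.5)
score = crps_gaussian(mu, sigma, obs=5.9)
print(mu, sigma, score)
```

In practice the coefficients would be estimated by minimising the mean CRPS (or a likelihood) over a training period; here they are fixed by hand to keep the example short.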

Learning the statistics of an ensemble forecast using neural networks

1University Bremen, 2Memorial University of Newfoundland

Ensemble prediction systems are an invaluable tool for weather prediction. Practically, ensemble predictions are obtained by running several perturbed numerical simulations. However, these systems are associated with a high computational cost and often involve statistical post-processing steps to improve their qualities.

Here we propose to use a deep-learning-based algorithm to learn the statistical properties of a given ensemble prediction system, such that this system will not be needed to simulate future ensemble forecasts. This way, the high computational costs of the ensemble prediction system can be avoided while still obtaining the statistical properties from a single deterministic forecast. We show preliminary results.

Climate-Invariant, Causally Consistent Neural Networks as Robust Emulators of Subgrid Processes across Climates

1University of Lausanne, 2Institut für Physik der Atmosphäre, German Aerospace Center (DLR), Oberpfaffenhofen, Germany, 3Department of Earth System Science, University of California, Irvine, USA, 4Institute of Data Science, German Aerospace Center (DLR), Jena, Germany, 5Department of Earth and Environmental Engineering, Columbia University, New York, USA

Data-driven algorithms, in particular neural networks, can emulate the effects of unresolved processes in coarse-resolution Earth system models (ESMs) if trained on high-resolution simulation or observational data. However, they can (1) make large generalization errors when evaluated in conditions they were not trained on; and (2) trigger instabilities when coupled back to ESMs. First, we propose to physically rescale the inputs and outputs of neural networks to help them generalize to unseen climates. Applied to the offline parameterization of subgrid-scale thermodynamics (convection and radiation) in three distinct climate models, we show that rescaled or "climate-invariant" neural networks make accurate predictions in test climates that are 4K and 8K warmer than their training climates. Second, we propose to eliminate spurious causal relations between inputs and outputs by using a recently developed causal discovery framework (PCMCI). For each output, we run PCMCI on the input time series to identify the reduced set of inputs that have the strongest causal relationship with the output. Preliminary results show that we can reach similar levels of accuracy by training one neural network per output with the reduced set of inputs; stability implications when coupled back to the ESM are also explored. Overall, our results suggest that explicitly incorporating physical knowledge into data-driven models of Earth system processes may improve their ability to generalize across climate regimes, whereas quantifying causal associations to select the optimal set of inputs may improve their consistency and stability.

How AI/ML interpenetrate into Weather Forecast: NN emulator for radiation parameterization and Retrieval similar weather condition using satellite images

1Director of AI Weather Forecast Research Division, 2Division of AI Weather Forecast Research, NIMS, KMA

KMA/NIMS has been running the 10-year AlphaWeather project to develop and apply intelligent solutions to weather forecasting, with an initial focus on improving precipitation forecasts. The AlphaWeather project consists of 7 sub-projects. The project will be introduced briefly, and then recent progress associated with NWP and feature detection will be presented. One topic is a neural network (NN) emulator for radiation parameterization and its application to the KMA operational NWP model (Song, Roh and Lee); the other is an algorithm for retrieving similar weather conditions using 30 years of geostationary satellite images (Lee, Shin, Hong and Park).

The NN emulator for radiation parameterization was developed to reduce the computational cost while replicating the accuracy of the control run. It resulted in a 60-fold speedup for the radiation process itself and a decrease of 87.26% in the total computational time of the NWP model (Song and Roh, 2021). In a recent study, a multi-model ensemble approach was considered to secure the long-term stability of the operational NWP model when the NN emulator is applied.

Several techniques have been tried to quantify the similarity of weather conditions in past weather data. Here we use NN-Descent, a simple algorithm for approximate k-nearest-neighbour (KNN) search based on local search. First, we adopted a CAE (Convolutional AutoEncoder) to generate robust feature vectors, producing 128/256-dimensional vectors from COMS East Asia images with 5 channels. Preliminary results of NN-Descent and how to apply the algorithm to weather forecasting will be presented.
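The retrieval step can be sketched with an exact brute-force search over feature vectors, which is the baseline that approximate methods such as NN-Descent are designed to speed up. This is not NN-Descent itself; the dates, the 4-dimensional vectors and the choice of cosine similarity are hypothetical stand-ins for the autoencoder features described above.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(query, archive, k=2):
    """Return the k archive keys whose feature vectors best match the query."""
    ranked = sorted(archive, key=lambda key: cosine_similarity(query, archive[key]), reverse=True)
    return ranked[:k]

# Hypothetical archive: date -> encoded satellite image (real vectors would have 128 or 256 entries).
archive = {
    "1999-07-01": [1.0, 0.0, 0.2, 0.1],
    "2005-01-15": [0.0, 1.0, 0.1, 0.9],
    "2012-07-03": [0.9, 0.1, 0.3, 0.0],
}
query = [1.0, 0.05, 0.25, 0.05]
matches = most_similar(query, archive)
print(matches)
```

Brute-force search compares the query with every archived vector, which becomes expensive for a 30-year image archive; that cost is the motivation for an approximate neighbour search.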

ClimateBench: A benchmark dataset for data-driven climate projections

1University of Oxford, 2North Carolina Institute for Climate Studies, 3Norwegian Meteorological Institute, 4UEA, 5UCL

Many different emissions pathways exist that are compatible with the Paris climate agreement, and many more are possible that miss that target. While some of the most complex Earth System Models have simulated a small selection of Shared Socioeconomic Pathways it is impractical to use these expensive models to fully explore the space of possibilities. Such explorations therefore mostly rely on one-dimensional impulse response models, or simple pattern scaling approaches to approximate the physical climate response to a given scenario. Here we present ClimateBench - a benchmark dataset based on a suite of CMIP, AerChemMIP and DAMIP simulations performed by NorESM2, and a set of baseline machine learning models that emulate its response to a variety of forcers. These surrogate models can predict annual mean global distributions of temperature, diurnal temperature range and precipitation (including extreme precipitation) given a wide range of emissions and concentrations of carbon dioxide, methane and aerosols. We discuss the accuracy and interpretability of these emulators and consider their robustness to physical constraints such as total energy conservation. Future opportunities incorporating such physical constraints directly in the machine learning models and using the emulators for detection and attribution studies are also discussed. This opens a wide range of opportunities to improve prediction, consistency and mathematical tractability. We hope that by laying out the principles of climate model emulation with clear examples and metrics we encourage engagement from statisticians and machine learning specialists keen to tackle this important and demanding challenge.

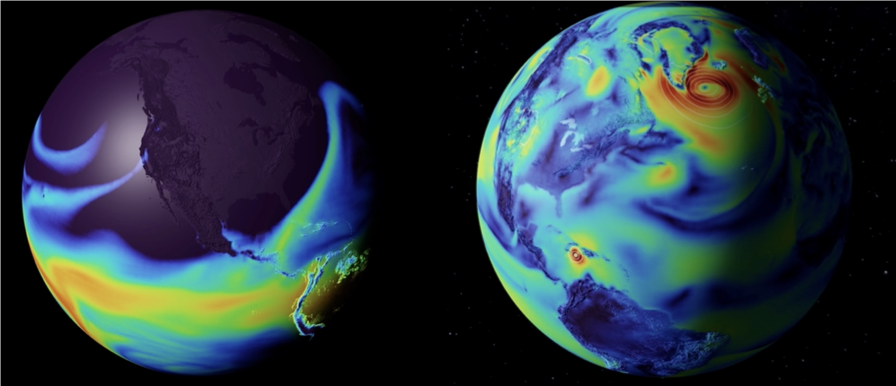

Why and how to learn end-to-end subgrid closures for atmosphere and ocean models?

1IGE, UGA/CNRS/IRD/G-INP, 2LEGI, UGA/CNRS/G-INP, 3IMT Atlantique, 4IPSL

Leveraging machine learning (ML) for designing subgrid closures for geoscientific models is becoming more and more common. State-of-the-art strategies approach the problem as a supervised learning task which trains a machine to predict a subgrid flux based on information available at coarse grain. In practice, training data are generated from high resolution model data coarse-grained to mimic the fields available in a lower resolution model. In essence, these strategies tend to optimize the design of subgrid closures on the basis of so-called a priori criteria. But the actual purpose of subgrid closures is to perform well in terms of a posteriori metrics that can only be computed after model integration. In this presentation, we will show that strategies based on a priori learning criteria may fail and converge towards closures that tend to be unstable in direct simulations. We will then show that subgrid closures can be directly trained in order to optimize a posteriori criteria. We will illustrate both strategies on two-dimensional turbulent flows, and show that end-to-end strategies yield closures which outperform all known empirical and data-driven closures in terms of performance, stability and ability to generalize to different flow configurations. Still, as the computation of the training loss involves integrating the coarse resolution model, our strategy is only applicable if the coarse resolution solver is fully differentiable so that it allows gradient back-propagation. We will argue that deep emulators of existing non-differentiable code bases may allow the deployment of end-to-end strategies for learning new components of legacy models. We will conclude this presentation by discussing the challenges of integrating ML-based closures into existing legacy atmosphere and ocean models.
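The difference between the two kinds of criteria can be made concrete with a deliberately simple sketch. The scalar model, the one-parameter closure `theta * x` and all values are hypothetical and are not the two-dimensional turbulence setup of the talk; the sketch only evaluates the two losses and does not perform gradient-based training.

```python
# Toy coarse model: dx/dt = -x + closure(x), with closure(x) = theta * x.
# Assumption: the "true" subgrid term corresponds to theta = -0.5.

TRUE_THETA = -0.5

def rollout(x0, theta, n_steps=50, dt=0.1):
    """Integrate the toy coarse model with forward Euler and return the trajectory."""
    xs = [x0]
    for _ in range(n_steps):
        x = xs[-1]
        xs.append(x + dt * (-x + theta * x))
    return xs

reference = rollout(1.0, TRUE_THETA)

def a_priori_loss(theta):
    """Error of the closure term itself, evaluated on reference states."""
    return sum((theta * x - TRUE_THETA * x) ** 2 for x in reference) / len(reference)

def a_posteriori_loss(theta):
    """Error of the trajectory obtained after integrating the model with this closure."""
    trial = rollout(1.0, theta)
    return sum((a - b) ** 2 for a, b in zip(trial, reference)) / len(reference)

# A closure that makes the coarse model unstable (theta > 1 leads to growth).
print(a_priori_loss(1.2), a_posteriori_loss(1.2))
```

For the unstable closure the a posteriori loss is much larger than the a priori loss, because errors accumulate over the integration. Minimising the a posteriori loss by gradient descent would require differentiating through `rollout`, which is why a differentiable solver is needed.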

Developing an emulator of an Ocean GCM

1University of Cambridge, 2British Antarctic Survey

The recent boom in machine learning and data science has led to a number of new opportunities in the environmental sciences. In particular, process-based weather and climate general circulation models (GCMs) represent the best tools we have to predict, understand and potentially mitigate the impacts of climate change and extreme weather. However, these models are incredibly complex and require huge amounts of High Performance Computing resources. Machine learning offers opportunities to greatly improve the computational efficiency of these models by developing data-driven emulators.

Here I discuss recent work to develop a data-driven emulator of an ocean GCM. Much recent progress has been made with developing data-driven forecast systems of atmospheric weather, highlighting the promise of these systems. However, despite being a key component of the weather and climate system, there has been less focus on applying these techniques to the ocean. Here, the emulator approach - that of learning to mimic a General Circulation Model, rather than learning directly from observations - is critical, given the limitations in observing the ocean across suitable temporal and spatial scales.

We train a neural network on the output from a GCM of an idealised channel configuration of oceanic flow. We show that the model learns the complex dynamics of the system well, replicating the mean flow and details within the flow over single prediction steps. We also see that when the model is iterated, predictions remain stable and continue to match the ‘truth’ over a short-term forecast period, here around a week.

An Online-Learned Neural Network Chemical Solver for Stable Long-Term Global Simulations of Atmospheric Chemistry

1Harvard University

A major computational barrier in global modeling of atmospheric chemistry is the numerical integration of the coupled kinetic equations describing the chemical mechanism. Machine-learned (ML) solvers can offer order-of-magnitude speedup relative to conventional implicit solvers, but past implementations have suffered from fast error growth and only run for short simulation times (<1 month). A successful ML solver for global models must avoid error growth over year-long simulations and allow for re-initialization of the chemical trajectory by transport at every time step. Here we explore the capability of a neural network solver equipped with an autoencoder to achieve stable full-year simulations of tropospheric oxidant chemistry in the global 3-D GEOS-Chem model, replacing its standard mechanism (228 species) by the Super-Fast mechanism (12 species) to avoid the curse of dimensionality. We find that online training of the ML solver within GEOS-Chem is essential for accuracy, whereas offline training from archived GEOS-Chem inputs/outputs produces large errors. After online training we achieve stable 1-year simulations with five-fold speedup compared to the standard implicit Rosenbrock solver with global tropospheric normalized mean biases of -0.3% for ozone, 1% for hydrogen oxide radicals, and -5% for nitrogen oxides. The ML solver captures the diurnal and synoptic variability of surface ozone at polluted and clean sites. There are however large regional biases for ozone and NOx under remote conditions where chemical aging leads to error accumulation. These regional biases remain a major limitation for practical application, and ML emulation would be more difficult in a more complex mechanism.
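The bias statistic quoted above can be sketched as follows, assuming the usual definition of normalized mean bias as the summed model-minus-reference difference divided by the summed reference. The site values are hypothetical and chosen so that regional errors cancel, which illustrates how a small global bias can coexist with larger regional biases.

```python
def normalized_mean_bias(model, reference):
    """Sum of (model - reference) divided by the sum of the reference values."""
    return sum(m - r for m, r in zip(model, reference)) / sum(reference)

# Hypothetical ozone values (ppb) at four sites: reference solver vs ML solver.
ref_ozone = [40.0, 50.0, 30.0, 80.0]
ml_ozone = [42.0, 49.0, 31.0, 78.0]

global_nmb_percent = 100.0 * normalized_mean_bias(ml_ozone, ref_ozone)
site_bias_percent = [100.0 * (m - r) / r for m, r in zip(ml_ozone, ref_ozone)]
print(global_nmb_percent, site_bias_percent)
```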

Neural-network parameterization of subgrid momentum transport learned from a high-resolution simulation

1MIT

Attempts to use machine learning for developing new parameterizations have mainly focused on parameterizing the effects of subgrid processes on thermodynamic and moisture variables, but the effects of subgrid processes on momentum variables are also important in simulations of the atmospheric circulation. In this study we use neural networks to develop a parameterization of subgrid momentum transport that learns from coarse-grained output of a high-resolution atmospheric simulation in an idealized aquaplanet domain. Substantial subgrid momentum transport is found to occur due to convection and non-orographic gravity waves.

The neural network parameterization we develop has a structure that ensures the conservation of momentum, and it has reasonable skill in predicting momentum fluxes associated with convection. However, the neural network is less accurate for momentum than for energy and moisture, possibly due to the difficulty in predicting momentum fluxes associated with non-orographic gravity waves, and the difficulty in predicting the sign of momentum fluxes associated with convection. When the neural-network parameterization is implemented in an atmospheric model at coarse resolution it leads to stable simulations, and some characteristics of the atmospheric circulation improve, while some characteristics are sensitive to the exact model configuration.
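One way a parameterization can conserve momentum by construction is to predict fluxes at layer interfaces and derive tendencies from their divergence. The abstract does not state how conservation is enforced, so the sketch below is an assumption: the interior fluxes stand in for network outputs, the zero boundary fluxes are a simplification, and all numbers are hypothetical.

```python
def tendencies_from_fluxes(interior_fluxes, layer_thickness):
    """Flux-divergence tendencies with zero flux imposed at both column boundaries."""
    fluxes = [0.0] + list(interior_fluxes) + [0.0]
    return [
        -(fluxes[k + 1] - fluxes[k]) / layer_thickness[k]
        for k in range(len(layer_thickness))
    ]

# Stand-in for network outputs at the 3 interior interfaces of a 4-layer column.
interior = [0.3, -0.1, 0.25]
thickness = [100.0, 200.0, 150.0, 50.0]

tendency = tendencies_from_fluxes(interior, thickness)
# The thickness-weighted column integral telescopes to (bottom flux - top flux) = 0.
column_integral = sum(t * h for t, h in zip(tendency, thickness))
print(tendency, column_integral)
```

Whatever values are supplied for the interior fluxes, the column-integrated tendency vanishes up to rounding, so the constraint holds regardless of how accurate the flux prediction is.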

A single-column approach might not be ideal for the momentum parameterization problem since certain atmospheric phenomena, such as organized convective systems, can cross multiple grid boxes and have slantwise circulations. To investigate how the accuracy of the neural-network momentum parameterization can be improved, we train neural networks using non-local inputs spanning over 3×3 columns of inputs. We find that including the non-local inputs substantially improves the prediction of subgrid momentum transport compared to a single-column formulation.

Overall, our results highlight the difficulty in accurately parameterizing subgrid momentum transport, but they also show that neural-network parameterizations have the potential to improve the representation of subgrid momentum transport in models of the atmosphere.

Machine learning for gravity waves in a climate model

1Met Office, 2Met Office Informatics Lab, 3Met Office Hadley Centre

Gravity wave effects are essential for correctly simulating the climatology and variability in climate models. They are too small to simulate directly, and so need to be parametrised, introducing simplifications and potential errors into the model. The UK Met Office is involved in a project funded by the Virtual Earth System Research Institute to improve gravity wave parametrisation by using machine learning algorithms. For emulating the non-orographic gravity wave (NOGWD) scheme currently used in the Met Office climate model, investigation has shown a convolutional neural network to outperform other machine learning algorithms, and found two years of training data to be sufficient. By coupling the trained neural network to a simple one-dimensional mechanistic model, we have reproduced the quasi-biennial oscillation simulated in the tropical stratosphere of climate models. The current NOGWD scheme uses multiple input fields, but the neural network performs well with just two (wind and buoyancy frequency) offering potential improvements in model performance. Future aims are to couple the neural network to the Met Office climate model, and to use high-resolution model data as input to the network with the hope of improving the atmospheric forcing produced by the current NOGWD parametrisation scheme. Any subsequent improvements in the quasi-biennial oscillation in the tropical stratosphere, or sudden warmings in the extratropical winter stratosphere, offer the potential to improve simulation of the surface in climate models. In addition, emulation of the existing orographic gravity wave (OGW) scheme is planned.

Physics-Informed Learning of Aerosol Microphysics

Aerosol particles play an important role in the climate system by absorbing and scattering radiation and influencing cloud properties. They are also one of the biggest sources of uncertainty for climate modeling. Many climate models do not include aerosols in sufficient detail due to computational constraints. In order to represent key processes, aerosol microphysical properties and processes have to be accounted for. This is done in the ECHAM-HAM global climate aerosol model using the M7 microphysics, but high computational costs make it very expensive to run at higher resolutions or for a longer time. We aim to use machine learning to emulate the microphysics model with sufficient accuracy and to reduce the computational cost by being fast at inference time. The original M7 model is used to generate input-output pairs on which a neural network is trained. We are able to learn the variables' tendencies, achieving an average R² score of 98.4% on a logarithmic scale and 77.1% in original units. We further explore methods to inform and constrain the neural network with physical knowledge to reduce violations of mass conservation and enforce mass positivity. On a GPU we achieve a speed-up of over 64x compared to the original model.
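The gap between the two R² values reported above is typical for quantities spanning several orders of magnitude. The sketch below uses hypothetical numbers, not M7 output, to show how the same predictions can score very differently on a logarithmic scale and in original units.

```python
import math

def r2_score(truth, pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean = sum(truth) / len(truth)
    ss_res = sum((t - p) ** 2 for t, p in zip(truth, pred))
    ss_tot = sum((t - mean) ** 2 for t in truth)
    return 1.0 - ss_res / ss_tot

# Hypothetical target values spanning four orders of magnitude, and predictions.
truth = [1e-3, 1e-2, 1e-1, 1.0, 10.0]
pred = [1.2e-3, 0.9e-2, 1.1e-1, 1.3, 6.0]

r2_log = r2_score([math.log10(t) for t in truth], [math.log10(p) for p in pred])
r2_raw = r2_score(truth, pred)
print(r2_log, r2_raw)
```

On the logarithmic scale every sample contributes through its relative error, whereas in original units the score is dominated by the largest values, so a single miss at the top of the range lowers it noticeably.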

Neural network emulation of precipitation and condensation processes in FV3GFS

1Allen AI

Neural networks can accelerate traditional weather and climate models by emulating certain expensive subroutines or code blocks. However, emulation is also the clearest supervised learning problem our discipline offers, and is a great proving ground for learning parameterizations from high resolution simulations or observations. So far, most successful emulators have focused on the radiation scheme since it is very expensive. However, radiation may be especially easy to emulate because it only interacts with the dynamics on long timescales. In this talk, I present our efforts to emulate cloud microphysics and latent heating. Unlike radiation, latent heating is tightly coupled to the dynamical core on timescales of seconds. Because of this tight coupling, models which are >95% accurate on offline test data can and do lead to online crashes. As such, microphysics emulation is a benchmark problem for developing moisture-coupled ML parameterizations.

Towards physics-based machine learning for land surface modeling: The case of land-atmosphere interactions

1University of Washington

While machine learning has been able to produce very good predictions in standalone applications in the Earth sciences, it is less clear how a machine-learned model of a single process might be coupled to the wider system of processes that occur in nature. In particular, this can be an issue when the process representation in a model does not match that which is observed, making it difficult to reconcile different modeling approaches.

In this presentation we build on previous work on embedding neural networks trained to produce turbulent heat fluxes into a process-based hydrologic model (PBHM). Previously we found this system to produce better predictions of latent and sensible heat fluxes, while maintaining a full representation of the water cycle. However, to embed the neural networks into the PBHM we had to make some limiting assumptions about how the hydrology would be affected by these heat fluxes. To address this we build on previous work that showed how encoding key portions of process representations into the neural network can have desirable properties. Specifically, we expand our neural networks to include a physics-based layer which partitions the latent and sensible heat fluxes among the vegetation and soil domains, each with their own resistance terms. We thus effectively provide the ML model with physics-informed inputs and maintain constraints such as mass and energy balance on the outputs of the coupled ML-PBHM simulations.

We show that this framework not only maintains the predictive capabilities of the standalone neural network, but also that, given the process representation directly, the neural network is able to learn ecohydrologically relevant interactions, allowing us to learn more complex process representations than we have from observations.

High-Resolution Solar Power Nowcasting by Deep Learning: How to Extract Features from Historic Time Series, Remote Sensing, and Numeric Weather Prediction Models to achieve Optimized Machine Learning Forecasts

1ZAMG

With the rapidly increasing use of solar power, accurate predictions of the site-specific power production are needed to ensure grid stability, energy trading, (re)scheduling of maintenance, and energy transmission. To meet the need for such location-optimized and highly resolved forecasts, a deep learning framework is adopted to forecast the expected power production, which varies strongly on diurnal and seasonal scales.

The methods include an LSTM (long short-term memory, a type of artificial neural network, ANN) and a random forest, and learn from a combination of multiple heterogeneous data sources. The relevant model inputs, i.e., ANN input features, include 3D fields from delayed numerical weather prediction models, satellite data and satellite data products, point-interpolated radiation time series from remote sensing, climatological values, and observation time series. We focus on the proper feature selection and combination of these data sources, and investigate how to prepare and transform the inputs for an optimized training data set in the studied test cases. In particular, we aim to provide nowcasts up to six hours ahead at several Central European solar power farms with a sub-hourly update frequency.

The developed method generally yields high forecast skill; different combinations of inputs and processing steps are shown in our analysis. We compare the forecast results obtained through this machine learning framework to available forecast methods, e.g., forecasts generated with the Python library pvlib driven with AROME or AROME RUC.

Improving sub-seasonal forecasts by correcting missing teleconnections using ANN-based post-processing

1KNMI, 2Vrije Universiteit, 3Deltares

Sub-seasonal forecasts are challenging for numerical weather prediction (NWP) and machine learning models alike. Predicting temperature with a lead-time of two or more weeks requires a forward model to integrate multiple complex interactions, like oceanic and land surface conditions that might lead to recurrent or persistent weather patterns. The representation of the relevant interactions is imperfect in NWP models, as is our physical understanding of them. Model predictability can therefore deviate from real predictability for poorly understood reasons, hindering future progress.

This paper combines NWP with machine learning to detect and resolve such imperfect representations. We post-process ECMWF extended range forecasts of high summer temperatures in Europe with a shallow artificial neural network (ANN). Predictors are objectively selected from a large set of atmospheric, oceanic and terrestrial sources of predictability from ERA-5 and ECMWF re-forecast output. In the proposed architecture, the ANN learns to ‘update’ a prior ECMWF-given probability of two-meter temperature exceeding a given threshold. Due to the architecture of the network the magnitude of each correction, like increasing underestimated probabilities, can be attributed to specific predictors at initialization- or forecast-time. We interpret the circumstances in which substantial corrections are made with two explainable-AI tools: KernelSHAP and Input times gradient. These reveal that a tropical west Pacific sea surface temperature pattern is connected to high monthly average European temperature at a two-week lead-time. This teleconnection pattern is underestimated by the dynamical model and by correcting for this bias the ANN-based post-processing can thus improve forecast skill. We further find that the method does not readily increase skill when applied to other combinations of lead-time, averaging period and threshold, possibly due to non-stationarity in the data, lack of real predictability or lack of a re-forecast set of sufficient length.
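The "Input times gradient" attribution named above multiplies each input by the gradient of the output with respect to that input. A minimal sketch for a single logistic unit is given below; the weights and predictor values are hypothetical, and the model is a toy stand-in rather than the ANN architecture of the study.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_times_gradient(weights, bias, x):
    """Attribution x_i * dp/dx_i for p = sigmoid(w . x + b)."""
    p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    # For a logistic unit, dp/dx_i = p * (1 - p) * w_i.
    gradient = [p * (1.0 - p) * w for w in weights]
    return p, [xi * g for xi, g in zip(x, gradient)]

# Hypothetical standardized predictors, e.g. an SST index, a soil moisture index, an unused input.
weights = [2.0, -1.0, 0.0]
x = [1.0, 2.0, 5.0]
probability, attributions = input_times_gradient(weights, 0.0, x)
print(probability, attributions)
```

Here the first predictor pushes the exceedance probability up, the second pushes it down by the same amount, and the third receives zero attribution because the model ignores it, however large its value.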

Interpretable Deep Learning for Probabilistic MJO Prediction

1University of Oxford

The Madden–Julian Oscillation (MJO) is the dominant source of sub-seasonal variability in the tropics. It consists of an eastward-moving region of enhanced convective storms coupled to changes in zonal winds at the surface and aloft. The chaotic nature of the Earth System means that it is not possible to predict the precise evolution of the MJO beyond a few days, so subseasonal forecasts must be probabilistic. The forecast probability distribution should vary from day to day, depending on the instantaneous predictability of the MJO. Operational dynamical subseasonal forecasting models do not have this important property. Here we show that a statistical model trained using deep learning can produce skilful state-dependent probabilistic forecasts of the MJO. The statistical model explicitly accounts for intrinsic chaotic uncertainty by predicting the standard deviation about the mean, and for model uncertainty using a Monte-Carlo dropout approach. Interpretation of the mean forecasts from the statistical model highlights known MJO mechanisms, providing confidence in the model. Interpretation of the predicted uncertainty identifies new physical mechanisms governing MJO predictability. In particular, we find the background state of the Walker Circulation is key for MJO propagation, and not underlying Sea Surface Temperatures as previously assumed.
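The two uncertainty sources described above can be sketched as follows, assuming a network that outputs a mean and a standard deviation and is evaluated several times with dropout left active. The Gaussian likelihood, the variance decomposition and the numbers are assumptions of this sketch, not details taken from the abstract.

```python
import math

def gaussian_nll(mean, std, target):
    """Negative log-likelihood of target under N(mean, std^2); a common loss for mean/std outputs."""
    return 0.5 * math.log(2.0 * math.pi * std ** 2) + (target - mean) ** 2 / (2.0 * std ** 2)

def combine_mc_dropout(passes):
    """passes: list of (mean, std) pairs from repeated stochastic forward passes."""
    n = len(passes)
    means = [m for m, _ in passes]
    mean = sum(means) / n
    intrinsic_var = sum(s ** 2 for _, s in passes) / n        # average predicted variance
    model_var = sum((m - mean) ** 2 for m in means) / n       # spread between passes
    return mean, intrinsic_var, model_var

# Hypothetical outputs of three forward passes with dropout active.
passes = [(1.0, 0.5), (1.2, 0.5), (0.8, 0.5)]
mean, intrinsic_var, model_var = combine_mc_dropout(passes)
total_std = math.sqrt(intrinsic_var + model_var)
print(mean, intrinsic_var, model_var, total_std)
```

The predicted variance represents the intrinsic, state-dependent uncertainty, while the spread of the means across dropout passes represents model uncertainty; adding the two variances gives a total predictive spread.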

Convolutional neural networks for skillful global probabilistic prediction of temperature and precipitation on sub-seasonal time-scales

1Karlsruhe Institute of Technology, Department of Economics, Institute of Econometrics and Statistics

Reliable sub-seasonal forecasts for precipitation and temperature are crucial to many sectors including agriculture, public health and renewable energy production. Since the forecast skill of numerical weather forecasts for lead times beyond two weeks is limited, the World Meteorological Organization launched a Challenge to improve Sub-seasonal to Seasonal Predictions using Artificial Intelligence, which was held from June to October 2021. Within the framework of this challenge, we have developed a hybrid forecasting model based on a convolutional neural network (CNN) that combines post-processing ideas with meteorological process understanding to improve sub-seasonal forecasts from the European Centre for Medium-Range Weather Forecasts (ECMWF).

Here, we present a refined version of our model that predicts tercile probabilities for biweekly averaged temperature and accumulated precipitation for weeks 3-4 and 5-6. Our model is trained on limited-area patches that are sampled from global predictor fields. It uses anomalies of large-scale predictors and features derived from the target variable forecasts as inputs. Spatial probabilistic forecasts are obtained by estimating coefficient values for local, spatially smooth basis functions as outputs of the CNN. Our CNN model provides calibrated and skillful probabilistic predictions, and clearly improves over climatology and the respective ECMWF baseline forecast in terms of the ranked probability score for weeks 3-4 and 5-6.
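For readers unfamiliar with the ranked probability score used for tercile forecasts, a minimal sketch follows. It uses the unnormalized convention (sum of squared differences of cumulative probabilities); some references divide by the number of categories minus one. The function names and the equal-thirds climatological reference are illustrative assumptions.

```python
import numpy as np

def ranked_probability_score(probs, obs_category):
    """RPS per forecast for ordered categories.

    probs:        (n, K) forecast probabilities, e.g. K = 3 terciles
                  ordered below / near / above normal
    obs_category: (n,) integer index of the observed category
    """
    probs = np.asarray(probs, dtype=float)
    obs = np.eye(probs.shape[1])[np.asarray(obs_category)]
    cum_diff = np.cumsum(probs, axis=1) - np.cumsum(obs, axis=1)
    return (cum_diff ** 2).sum(axis=1)

def rpss_vs_climatology(probs, obs_category):
    """Skill score relative to an equal-odds climatological forecast.

    1 is a perfect forecast, 0 matches climatology, negative is worse.
    """
    probs = np.asarray(probs, dtype=float)
    clim = np.full_like(probs, 1.0 / probs.shape[1])
    rps_forecast = ranked_probability_score(probs, obs_category).mean()
    rps_clim = ranked_probability_score(clim, obs_category).mean()
    return float(1.0 - rps_forecast / rps_clim)
```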

Identifying relevant large-scale predictors for sub-seasonal precipitation forecast using explainable neural networks

1Mathematical Research Centre, 2ECMWF, 3Instituto de Ciencias del Mar (ICM-CSIC), 4Centre de Recerca Matemática (CRM), 5Institute for Marine Science

The last few years have seen an ever growing interest in weather predictions on sub-seasonal time scales ranging from 2 weeks to about 2 months. By forecasting aggregated weather statistics, such as weekly precipitation, it has indeed become possible to overcome the theoretical predictability limit of 2 weeks, bringing life to time scales which historically have been known as the “predictability desert”. The growing success at these time scales is largely due to the identification of weather and climate processes providing sub-seasonal predictability, such as the Madden-Julian Oscillation (MJO) and anomaly patterns of global sea surface temperature (SST), sea surface salinity, soil moisture and snow cover. Although much has been gained by these studies, a comprehensive analysis of potential predictors and their relative relevance to forecast sub-seasonal rainfall is still missing.

At the same time, data-driven machine learning (ML) models have proved to be excellent candidates to tackle two common challenges in weather forecasting: (i) resolving the non-linear relationships inherent to the chaotic climate system and (ii) handling the steadily growing amounts of Earth observational data. Not surprisingly, a variety of studies have already displayed the potential of ML models to improve the state-of-the-art dynamical weather prediction models currently in use for sub-seasonal predictions, in particular for temperatures, precipitation and the MJO. It seems therefore inevitable that the future of sub-seasonal prediction lies in the combination of both the dynamical, process-based and the statistical, data-driven approach.

With the advent of this new age of combined Neural Earth System Modeling, we want to provide insight and guidance for future studies on (i) the extent to which large-scale teleconnections on the sub-seasonal scale can be resolved by purely data-driven models and (ii) the relative contributions of the individual large-scale predictors to a skillful forecast. To this end, we build neural networks to predict sub-seasonal precipitation based on a variety of large-scale predictors derived from oceanic, atmospheric and terrestrial sources. As a second step, we apply layer-wise relevance propagation to examine the relative importance of different climate modes and processes in skillful forecasts.

Preliminary results show that the skill of our data-driven ML approach is comparable to that of state-of-the-art dynamical models, suggesting that current operational models are able to correctly model large-scale teleconnections within the climate system. The ML model achieves its highest skill over the tropical Pacific, the Maritime Continent and the Caribbean Sea, in agreement with dynamical models. By investigating the relative importance of the large-scale predictors for skillful predictions, we find that the MJO and processes associated with SST anomalies, such as the El Niño-Southern Oscillation, the Pacific decadal oscillation and the Atlantic meridional mode, all play an important role for individual regions along the tropics.

Ensemble forecast of the Madden Julian Oscillation using a stochastic weather generator based on analogs of Z500

1ARIA Technologies, 8 Rue de la Ferme, 92100 Boulogne-Billancourt, France & Laboratoire des Sciences du Climat et de l’Environnement, UMR 8212 CEA-CNRS-UVSQ, IPSL & Université Paris-Saclay, 91191 Gif-sur-Yvette, France, 2Laboratoire des Sciences du Climat et de l’Environnement, UMR 8212 CEA-CNRS-UVSQ, IPSL & Université Paris-Saclay, 91191 Gif-sur-Yvette, France, 3Departament de Fisica, Universitat Politècnica de Catalunya, Edifici Gaia, Rambla Sant Nebridi 22, 08222 Terrassa, Barcelona, Spain

Skillful forecasting of the Madden Julian Oscillation (MJO) is of considerable scientific interest because the MJO is one of the most important sources of sub-seasonal predictability. Proxies of the MJO can be derived from the first principal components of wind speed and outgoing longwave radiation (OLR) in the Tropics (RMM1 and RMM2). The challenge is to forecast these two indices. This study aims to provide ensemble forecasts of MJO indices from analogs of the atmospheric circulation, mainly the geopotential at 500 hPa (Z500), by using a stochastic weather generator (SWG). The SWG is based on the random sampling of circulation analogs, which is a simple form of machine learning simulation. We generate an ensemble of 100 members for the amplitude and the RMMs for sub-seasonal lead times (from 2 to 4 weeks). We then evaluate the skill of the ensemble forecast and of the ensemble mean using probabilistic and deterministic skill scores, respectively. We found that a reasonable forecast could reach 40 days for the different seasons. We compared our SWG forecast with other forecasts of the MJO.
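The idea of sampling circulation analogs at random can be sketched as follows. This is a deliberately simplified single-step version under stated assumptions: analogs are ranked by Euclidean distance between flattened Z500 fields, and each ensemble member is the archived index value observed `lead` days after a randomly drawn analog date. The function name, the distance measure and the default of 20 analogs are hypothetical; only the ensemble size of 100 comes from the abstract.

```python
import numpy as np

def analog_ensemble_forecast(z500_archive, index_archive, z500_today,
                             lead, n_analogs=20, n_members=100, seed=0):
    """Draw an ensemble forecast of an index (e.g. RMM1) from analogs.

    z500_archive:  (n_days, n_grid) archived daily Z500 fields
    index_archive: (n_days,) archived daily values of the target index
    z500_today:    (n_grid,) field on the initialization day
    lead:          lead time in days
    """
    rng = np.random.default_rng(seed)
    # Analog dates must have an archived successor at +lead days.
    usable = z500_archive.shape[0] - lead
    dist = np.linalg.norm(z500_archive[:usable] - z500_today, axis=1)
    analog_days = np.argsort(dist)[:n_analogs]
    # Each member follows one randomly chosen analog forward in time.
    picks = rng.choice(analog_days, size=n_members, replace=True)
    return index_archive[picks + lead]
```

In practice the initialization day and its neighbours would be excluded from the archive, and a generator of this kind may resample analogs day by day rather than jumping directly to the target lead time.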

Improving the prediction of the Madden-Julian Oscillation of the ECMWF model by post-processing

1Universitat politecnica de Catalunya, 2Researcher, 3Max Planck Institute for the Physics of Complex Systems, 4Universidad de la Republica, 5Universitat Politècnica de Catalunya

The Madden-Julian Oscillation (MJO) is a major source of predictability on the sub-seasonal (10- to 90-days) time scale.

An improved forecast of the MJO may have important socio-economic impacts due to the influence of the MJO on both tropical and extratropical weather extremes.

Although state-of-the-art climate models have in recent decades proved capable of forecasting the MJO with skill beyond 5 weeks, there is still room for improvement.

In this study, we use Multiple Linear Regression and an Artificial Neural Network as post-processing methods to improve one of the currently best dynamical models, developed by the European Centre for Medium-Range Weather Forecasts (ECMWF).

We show that the post-processing with the machine learning algorithm employed leads to an improvement of the MJO prediction.

The largest improvement is in the prediction of the MJO geographical location and intensity.

Sub-Seasonal Probabilistic Precipitation Forecasting using Extreme Learning Machine

1Center for Earth System Modeling, Analysis, & Data (ESMAD), Department of Meteorology and Atmospheric Science, The Pennsylvania State University

Sub-Seasonal (S2S) climate forecasts suffer from a significant lack of prediction skill beyond week-two lead times. While the statistical bias correction of global coupled ocean-atmosphere circulation models (GCM) offers some measure of skill, there is significant interest in the capacity of machine learning-based (ML) forecasting approaches to improve S2S forecasts at these time scales. The large size of S2S datasets unfortunately makes traditional ML approaches computationally expensive, and therefore mostly inaccessible to those without access to institutional computing resources. In order to address this problem, Extreme Learning Machine (ELM) can be used as a fast alternative to traditional neural network-based ML forecasting approaches. ELM is a randomly initialized neural network approach, which, instead of adjusting hidden layer neuron weights through backpropagation, leaves them unchanged and solves its output layer with the generalized Moore-Penrose inverse.
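The ELM training step described above can be sketched in a few lines of NumPy. This is a generic single-hidden-layer regression version written for illustration, not the PO-ELM or XCast implementation; the class name, the tanh activation and the default hidden-layer size are assumptions.

```python
import numpy as np

class ExtremeLearningMachine:
    """Minimal ELM regressor: random fixed hidden layer, linear readout."""

    def __init__(self, n_hidden=50, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        # Hidden activations; W and b are never updated after fit().
        return np.tanh(X @ self.W + self.b)

    def fit(self, X, y):
        # Randomly initialize hidden weights and leave them unchanged.
        self.W = self.rng.normal(size=(X.shape[1], self.n_hidden))
        self.b = self.rng.normal(size=self.n_hidden)
        H = self._hidden(X)
        # Solve the output layer with the Moore-Penrose pseudo-inverse.
        self.beta = np.linalg.pinv(H) @ y
        return self

    def predict(self, X):
        return self._hidden(X) @ self.beta
```

Because fitting reduces to one pseudo-inverse rather than iterative backpropagation, the cost is dominated by a single linear-algebra solve, which is the source of the speed advantage the abstract refers to.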

As our submission to the recently concluded WMO sub-seasonal-to-seasonal prediction AI/ML Challenge, we designed a high-performance Probabilistic Output ELM (PO-ELM) implementation for the generation of gridpoint-wise S2S scale probabilistic forecasts. The traditional PO-ELM approach modifies ELM to produce binary probabilistic output on the interval (0,1) by solving a sigmoid objective function in the output layer with modified linear programming. In order to accommodate probabilistic forecasting, we further modified PO-ELM in this case to produce relative tercile probabilities. We adopted a rule applying Normalization when PO-ELM output probabilities exceed one, and the SoftMax function otherwise. We used XCast, a Python library previously designed by the authors, to implement multivariate PO-ELM-based probabilistic forecasts of precipitation using the outputs of ECMWF’s S2S forecast. Here, we describe the motivation, co-design, and implementation of PO-ELM for S2S climate prediction, and discuss skill and interpretability of the resulting forecasts.

Opportunistic mixture model for post-processing S2S temperature and precipitation forecasts using convolutional neural networks

1Computer Research Institute of Montreal, 2Environment and Climate Change Canada

Forecasts on subseasonal-to-seasonal (S2S) timescales are challenging when compared to weather (0-2 weeks) and seasonal timescales (1-12 months). Whereas weather forecasts are strongly determined by the evolution of initial weather conditions, and seasonal forecasts by slowly varying boundary conditions, the quality of S2S forecasts relies on a combination of these factors in a complicated way.

Major operational forecasting centers have traditionally adopted similar strategies to improve forecast skill at S2S timescales, such as increasing the complexity of physically based models, adopting an ensemble approach, and improving statistical post-processing. Despite this, climatology is still difficult to beat in the 3-6 week projection window, especially for precipitation. With the recent advances in machine learning, there is hope that this predictability gap could be filled by novel post-processing methods that take advantage of the data currently output by operational forecasting centers.

We present an opportunistic mixture model which takes these building blocks as input:

1) traditional statistical Ensemble Model Output Statistics (EMOS) applied to the ECMWF, NCEP and ECCC forecast model output,

2) a convolutional neural network (CNN) applied to ECMWF model output,

3) climatology.

As such, our approach can be qualified as a multi-model ensemble. To optimally blend these different forecasts, we have developed a CNN-based blending model which is trained to produce the best 2-m temperature and precipitation for weeks 3-4 and 5-6 worldwide. Over the 2020 test period, our approach shows significant improvement over individual dynamical model forecasts, as well as over climatology.

In addition to our main results, details concerning our architecture as well as key features, such as those used for diminishing the risk of overfitting, will be presented. An analysis of the behavior of the blending model throughout the year will also be presented.

Our contribution was originally developed for the 2021 WMO S2S AI/ML Challenge.

Deep learning augmented numerical weather prediction digital twin experiments for global precipitation forecasting

1The University of Texas at Austin

Accurate precipitation predictions are considered a benchmark for numerical weather prediction (NWP) models. Precipitation simulation is a complex interaction of several dynamical and thermodynamical processes across multiple scales. As a result, precipitation bias and uncertainty are inherent in various operational weather predictions. A number of efforts are underway to develop rapid and accurate systems that can yield precipitation forecasts. These efforts are within the context of different hazard vulnerability mapping, agricultural, and hydrological applications, and also as boundary conditions for more limited domain model simulations. Here, we present a simplified NWP Digital Twin (DT) with a modified ‘Deep Learning Weather Prediction - Cubed Sphere’ (DLWP-CS) architecture that is based on the UNET and operates as a deep convolutional neural network (CNN) with residual learning. Experiments with the DT serve as a proof-of-concept to augment global data-driven precipitation models. The framework utilizes UNET, which is a deep learning-based model that can conduct image-to-image regression. In this study, we present results from the training of two deep learning-based models that conduct one-to-one mapping from surface air temperature and total cloud cover to target (predict) precipitation. The experiments are conducted using the widely used ERA5 (0.25 deg, 1 hourly) reanalysis. The choice of the mapping variables builds on the prior understanding of the processes associated with the dynamical connections between clouds and surface air temperatures as a pathway through various feedbacks leading to convection, and dynamical exchanges across land and ocean ultimately resulting in precipitation outcome. The 0.25 deg global latitude-longitude gridded ERA5 data is first transformed to cubed sphere projections. Model training was achieved using hourly datasets from 1981 to 2011.
The test prediction experiments are conducted as daily cumulative values (due to the temporal resolution of output available to us), for the testing period which runs from 2012 to 2015. When the results of the experiments are compared to those of an operational dynamical model, it is found that the model trained using surface temperature as a predictor performed the best. Benchmarking to operational predictions from the GFS T1534, the DT output is equivalent to a doubling of the grid points (finer resolution) and an increase in area-averaged skill as evaluated by Pearson correlation coefficients. Our proof-of-concept research shows that a residual learning-based UNET can extract physical links from a simple scalar such as surface temperature to target an exceptionally interlinked output such as precipitation. This promising outcome gives greater confidence in developing parsimonious linkages with DT in dynamical operational models that can produce higher spatial resolution, possibly more rapid, high-fidelity probabilistic precipitation outcomes. Additional experiments with a combination of cloud and surface (air) temperature also yield modest improvements, highlighting the extensibility of the results.

Forecasting Global Weather with Graph Neural Networks

We present a data-driven approach for forecasting global weather using graph neural networks. The system learns to step forward the current 3D atmospheric state by six hours, and multiple steps are chained together to produce skillful forecasts going out several days into the future. The underlying model is trained on reanalysis data from ERA5 or forecast data from GFS. Test performance on metrics such as Z500 (geopotential height) and T850 (temperature) improves upon previous data-driven approaches and is comparable to operational, full-resolution, physical models from GFS and ECMWF, at least when evaluated on 1-degree scales and when using reanalysis initial conditions. We also show results from connecting this data-driven model to live, operational forecasts from GFS.

Deep learning weather prediction: epistemology and new scientific horizons

1University of Washington, Seattle, USA, 2Atmospheric Sciences, U of Washington, 3Microsoft

We examine cases in which a machine learning model trained using re-analysis data with convolutional neural networks (CNNs) learns atmospheric dynamics in a nontraditional framework.

We compare the performance of an ensemble-weather-prediction system based on a global deep-learning weather-prediction (DLWP) model with reanalysis data and forecasts from the European Centre for Medium-Range Weather Forecasts (ECMWF) ensemble for sub-seasonal weather prediction.

The model is trained on a cubed-sphere grid using a loss function that minimizes forecast error over a single 24-hour period. The model predicts seven 2D shells of atmospheric data on roughly 150x150 km grids with a quasi-uniform global coverage. Notably, our model can be iterated forward indefinitely to produce forecasts at 6-hour temporal resolution for any lead time. We present case studies showing the extent to which the model is able to learn “model physics” to forecast two-meter temperature and diagnose precipitation. Sources of ensemble spread and the performance of the ensemble are discussed relative to the ECMWF S2S ensemble forecasts.

We discuss the new doors for scientific investigation that would be opened by a reliable, well performing DLWP model.

A Machine Learning Approach to Stochastic Downscaling of Precipitation Forecasts

1AOPP at Oxford University, 2University of Oxford, 3ECMWF, 4University of Oxford

Despite continuous improvements, precipitation forecasts are still not as accurate and reliable as those of other meteorological variables, as several key processes affecting precipitation distribution and intensity occur below the resolved scale of global weather models. Generative adversarial networks (GANs) have been demonstrated by the computer vision community to be successful at super-resolution problems, i.e., learning to add structure to coarse images. Leinonen et al. (2020) previously applied a GAN to produce ensembles of reconstructed high-resolution atmospheric fields, given coarsened input data. In this paper, we demonstrate that this approach can be extended to the more challenging problem of increasing the accuracy and resolution of low-resolution input from a weather forecasting model in order to better match high-resolution 'truth' radar measurements. The neural network must hence learn to add resolution and structure while accounting for forecast error. We show that GANs and VAE-GANs can match the statistical properties of state-of-the-art pointwise post-processing methods whilst creating high-resolution, spatially coherent precipitation maps.

Causal deep learning for studying the Earth system: soil moisture-precipitation coupling in ERA5 data across Europe

1Forschungszentrum Jülich, 2Institute of Bio- and Geosciences, Agrosphere (IBG-3), Forschungszentrum Jülich, 3Fraunhofer Center for Machine Learning and Fraunhofer SCAI

The Earth system is a complex non-linear dynamical system. Despite many years of research, sophisticated numerical models and a plethora of observational data, many processes and relations between variables are still poorly understood. Current approaches for studying relations in the Earth system may be broadly divided into approaches based on numerical simulations and statistical approaches. However, these approaches have several inherent limitations, such as high computational costs, reliance on the correct representation of relations in numerical models, strong assumptions related to linearity or locality, and the fallacy of equating correlation with causality.

Here, we propose a novel methodology combining deep learning (DL) and principles of causality research in an attempt to overcome these limitations. The methodology extends the recent idea of training and analyzing DL models to gain new scientific insights in the relations between input and target variables by combining this idea with a theorem from causality research. This theorem states that a statistical model may learn the causal impact of an input variable on a target variable if suitable additional input variables are chosen. As an illustrative example, we apply the methodology to study soil moisture-precipitation coupling in ERA5 climate reanalysis data across Europe. We demonstrate that, harnessing the great power and flexibility of DL models, the proposed methodology may yield new scientific insights into complex, nonlinear and non-local coupling mechanisms in the Earth system.

Rainfall scenarios from AROME-EPS forecasts using autoencoder and climatological patterns

1Meteo France, 2Météo-France

The use of ensemble prediction systems (EPS) is challenging because of the huge amount of information that they provide. EPS forecasts are often summarised by statistical quantities (e.g. quantile maps). Although such a mathematical representation is efficient for capturing the ensemble distribution, it lacks physical consistency, which raises issues for many applications of EPS in an operational context. In order to provide a physically consistent synthesis of the French convection-permitting AROME-EPS forecasts, we propose to automatically draw a few scenarios that are representative of the different possible outcomes. Each scenario is a reduced set of EPS members.

A first step aims at extracting relevant features in each EPS member in order to reduce the problem dimensionality. Then, each EPS member is assigned to a climatological pattern using these relevant features. The EPS members in the same climatological pattern form a scenario.

The originality of our work is to leverage the capacities of deep learning for feature extraction. For that purpose, we use a convolutional autoencoder (CAE) to learn an optimal low-dimensional representation (also called latent space representation) of the input forecast field. In this work, the algorithm is developed to work on 1h-accumulated rainfall from AROME-EPS. The CAE is trained on a 5-year dataset of AROME-EPS members. The resulting archive of latent space states is then used to define the climatological patterns using a standard k-means clustering method. After the CAE training and the clustering, a synthesis plot of rainfall scenarios is designed to create a first real-time production in a research mode.

A concrete example of rainfall scenarios from September 2021 is given to illustrate this new post-processing method. The workflow from AROME-EPS outputs to rainfall scenarios is also presented.

Utilizing the self-learning capability of a deep neural network and continuous geostationary satellite monitoring to understand cloud structure and organization

1Institute for Geophysics and Meteorology, University of Cologne, 2Department Remote Sensing of Atmospheric Processes, Leibniz Institute for Tropospheric Research

The resolution of geostationary satellites is continuously improving, enabling new insights into the complex structure and organization of clouds. High-resolution spatio-temporal structures of clouds are major challenges for numerical weather prediction. An important question is whether high-resolution models can realistically reproduce reality.

Our work aims to understand the structure and organization of cloud systems by exploiting the self-learning capability of a deep neural network and using high-resolution cloud optical depth images. The neural network utilizes deep clustering and non-parametric instance-level discrimination for decision-making at any learning stage. The data augmentation in the data pipeline, multi-clustering of the dense vectors, and a multilayer perceptron projection at the end of the CNN help the network learn a better representation of images.

Unlike most studies, our neural network is trained over the central European domain, characterized by strong land surface type and topography variations. The satellite data is post-processed and retrieved at a higher spatio-temporal resolution (2 km, 5 min), equivalent to the future Meteosat Third Generation satellite.

We show how recent advances in deep learning networks are used to understand the cloud's physical properties in temporal and spatial scales. We avoid the noise and bias from human labeling in a purely data-driven approach. We demonstrate explainable artificial intelligence (XAI), which helps gain trust for the neural network's performance. We visualize the cloud organization's different regions that correspond to any decision of interest by the neural network. We use K-nearest neighbors to find similar cloud structures at different time scales.

A thorough quantitative evaluation is done on two spatial domains and two pixel configurations (128x128, 64x64). We examine the uncertainty associated with distinct machine-detected cloud-pattern categories using an independent hierarchical agglomerative algorithm. The work therefore also explores the uncertainties related to the automatic machine-detected patterns and how they vary with different cloud classification types.

ML-based fire hazard model trained on thermal infrared satellite data

1Ororatech

Forest fires are an increasing problem and have become more frequent and destructive in recent years. As a result of climate change, environmental parameters associated with forest fires are becoming increasingly unstable, such as an increase in the number of consecutive hot days, which makes risk assessment difficult. Reliable hazard models are needed so that forest owners can deploy resources in vulnerable areas, the government can plan mitigation strategies, and insurance companies can offer fair pricing.

We built a wildfire hazard model for Australia that infers fire susceptibility based on environmental conditions that led to fire in the past. It therefore learns from actual fire conditions. The problem is set up as a pixel-wise classification problem, with the machine learning model predicting the daily fire hazard per grid cell (0.1°), based on multivariate time series input of the preceding 5 days.

The training dataset contains selected ECMWF ERA5-Land variables, ESA CCI land cover, seasonal encoding as well as positional encoding. The ground truth label dataset is derived from fusing thermal infrared data of 20 different satellites into one active fire dataset. The classification problem itself is highly imbalanced, with non-fire pixels making up 99.78% of the training data. We evaluate the ability of the model to correctly classify fire using the F1 score and compare the results against selected standard fire hazard models.
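To see why the F1 score is a more informative metric than accuracy under this degree of imbalance, consider the small sketch below. The function names and the synthetic 10,000-pixel example are assumptions for illustration; only the 99.78% non-fire share is taken from the abstract.

```python
import numpy as np

def f1_score_binary(y_true, y_pred):
    """F1 = 2*TP / (2*TP + FP + FN) for the positive (fire) class."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = np.sum(y_true & y_pred)
    fp = np.sum(~y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    denom = 2 * tp + fp + fn
    # Define F1 as 0 when there are no positives and no predictions.
    return float(2 * tp / denom) if denom > 0 else 0.0

def accuracy(y_true, y_pred):
    """Fraction of pixels classified correctly."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

# Synthetic example: 22 fire pixels out of 10,000 (99.78% non-fire).
labels = np.zeros(10_000, dtype=int)
labels[:22] = 1
never_fire = np.zeros_like(labels)  # trivial "always no fire" model
```

Here `accuracy(labels, never_fire)` is 0.9978 while `f1_score_binary(labels, never_fire)` is 0.0, so a model that never predicts fire looks excellent by accuracy and useless by F1.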

Compared to classical fire hazard models, this approach allows us to account for regional and atypical fire conditions. We also show that the model can be used on weather forecast data, allowing us to classify fire risk up to 14 days ahead.

Photographic Visualization of Weather Forecasts with Generative Adversarial Networks

1MeteoSwiss, 2Comerge, 3Friedrich-Alexander-Universität Erlangen-Nürnberg, 4ETH Zürich

Webcam images are an information-dense yet accessible visualization of past and present weather conditions, and are consulted by meteorologists and the general public alike. Weather forecasts, however, are still communicated as text, pictograms or charts, making it necessary to switch the visualization format when moving from observation to prediction. We are therefore exploring how photographic images can be used to visualize future weather conditions as well.

Photographic visualizations of weather forecasts should satisfy the following criteria: The images should look real and be free of obvious artifacts. They should match the predicted atmospheric, ground and illumination conditions in the view of the camera. The transition from observation to forecast should be seamless, and there should be visual continuity between images for consecutive lead times.

An image retrieval system could satisfy the first two criteria using a database of annotated images, but the third and fourth criteria would require a prohibitively large archive. We therefore use conditional Generative Adversarial Networks to synthesize new images: a generator network to transform the present image into a future image consistent with the forecast, and a discriminator network to judge whether a given image is real or synthesized. Training the two networks against each other results in a visualization method that scores well on all evaluation criteria.

We present results for three cameras, one on the Swiss plateau, one on a mountain pass and one in a valley south of the Alps. We show that in many cases, the generated images are indistinguishable from real images and match the COSMO-1 forecast. Nowcasting sequences of generated images are seamless and show visual continuity, too.

We conclude by discussing current limitations of the visualization method, and the work that is still required for it to become an operational forecast product.

Identifying Lightning Processes in ERA5 Soundings with Deep Learning

1Department of Mathematics, University of Innsbruck, 2University Innsbruck

Atmospheric environments favorable for convection are commonly represented by proxies such as CAPE, CIN, wind shears and charge separation. These proxies are available in various modifications to adapt to regional characteristics of convection. Hourly reanalysis data with high vertical resolution of cloud physics open possibilities for identifying even more tailored proxies.

We examine the ability of deep learning to identify vertical profiles favorable for convection. This includes adaptation to regional characteristics in complex terrain. The domain of interest covers the lightning hot spot of the European Eastern Alps. The study focuses on the summers of 2010-2019.

We train a deep neural net linking ERA5 vertical profiles of cloud physics, mass field variables and winds to lightning location data from the Austrian Lightning Detection & Information System (ALDIS), which have been transformed to a binary target variable labelling the ERA5 cells as 'lightning' and 'no lightning'. The ERA5 parameters are taken on model levels up to the tropopause, forming an input layer of 671 features. Each of the three hidden layers consists of 256 neurons. Weighted cross-entropy serves as the loss to account for the imbalance of the target classes. The data of 2019 serve as the test period. The remaining data (2010-2018) are split into 80% training and 20% validation.
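A weighted cross-entropy loss for an imbalanced binary target can be sketched as below. The abstract does not state how the class weights were chosen, so the inverse-frequency weighting shown here, along with the function names, is an assumption for illustration only.

```python
import numpy as np

def weighted_binary_cross_entropy(y_true, p_pred, pos_weight, eps=1e-12):
    """Cross-entropy with the positive ('lightning') class up-weighted.

    y_true:     (n,) array of 0/1 labels
    p_pred:     (n,) predicted probabilities of the positive class
    pos_weight: factor applied to the loss of positive samples
    """
    y = np.asarray(y_true, dtype=float)
    # Clip to avoid log(0) for saturated predictions.
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    loss = -(pos_weight * y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    return float(loss.mean())

def inverse_frequency_weight(y_true):
    """Assumed weighting: ratio of negative to positive samples."""
    y = np.asarray(y_true, dtype=float)
    n_pos = y.sum()
    return float((y.size - n_pos) / n_pos)
```

With this choice, the rare class contributes as much to the total loss as the common class, which discourages the network from defaulting to 'no lightning' everywhere.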

On independent test data, the net partly outperforms a reference with features based on meteorological expertise. Shapley values highlight the atmospheric processes learnt by the net. Preliminary results show that the net identifies cloud ice and snow content in the upper and mid troposphere as relevant features.

Deep Learning for the Verification of Synoptic-scale Processes in NWP and Climate Models

1Karlsruhe Institute of Technology, 2IMK-TRO, Karlsruhe Institute of Technology (KIT)

Physical processes on the synoptic scale are important modulators of the large-scale extratropical circulation. In particular, rapidly ascending air streams in extratropical cyclones, so-called warm conveyor belts (WCBs), have a major impact on the Rossby wave pattern and are sources and magnifiers of forecast uncertainty. Thus, an adequate representation of WCBs is desirable in NWP and climate models. Most often, WCBs are defined as Lagrangian trajectories that ascend in two days from the lower to the upper troposphere. The calculation of trajectories is computationally expensive and requires data at high spatio-temporal resolution, so systematic evaluations of the representation of WCBs in NWP and climate models are missing. In this study, we present a novel framework that aims to predict the inflow, ascent, and outflow phases of WCBs from instantaneous gridded fields. A UNet-type Convolutional Neural Network (CNN) is trained using a combination of meteorological parameters as predictors. Validation against a Lagrangian-based dataset confirms that the CNN model reliably replicates the climatological frequency of WCBs as well as their footprints at instantaneous time steps. Given its comparatively low computational cost, we propose that the new diagnostic may be applied to systematically verify WCBs in large datasets such as ensemble reforecasts or climate model projections. Our diagnostic demonstrates how deep learning methods may be used to advance our fundamental understanding of synoptic-scale processes that are involved in forecast uncertainty and systematic biases in NWP and climate models.

Latent space, feature space and the global domain – how ozone research can benefit from explainable machine learning

1Jülich Supercomputing Centre

Machine learning approaches are widely applied nowadays to predict the spatial variability of tropospheric ozone. In this presentation, we show how the reliability and generalizability of machine learning models for ozone prediction can be evaluated by analyzing their latent spaces and feature spaces. This allows us to gain new insights across the global domain.

As a toxic trace gas and a short-lived climate forcer, tropospheric ozone exhibits high spatial variability that depends on many environmental factors. We use AQ-Bench, a benchmark dataset that links a multitude of geospatial datasets to annual mean ozone statistics, to train different machine learning models for ozone prediction. A closer look at the latent space and the feature space of the models allows us to explain uncertainties in the predictions. Based on this analysis, we point out gaps in the training data and assess the generalizability of the models. Finally, we transfer our findings back to the global geographic domain by, for example, proposing locations for new air quality monitoring stations, or by mapping ozone globally where we are confident that the models generalize. This is an example of how explainable machine learning is creating new insights for ozone research.

Learning from Noisy Class Labels for Earth Observation

1TU Berlin

With the advances in satellite technology, Earth observation (EO) data archives are continuously growing at high speed, delivering an unprecedented amount of data on the state of our planet and the changes occurring on it. The two tasks common to most EO applications are: i) generation and updating of land-use land-cover (LULC) maps; and ii) automatic scene understanding based on the LULC classes. In recent years, deep learning (DL) approaches have attracted great attention for these tasks. Most DL models require a large number of labeled samples during training to optimize all parameters and reach high performance. The availability and quality of such data determine the feasibility of many DL models. This is mainly because LULC classes are related to physical phenomena characterized by specific properties (spatial variability of classes, non-stationary spectral signatures, etc.) that are very difficult to model without reliable labelled data. However, collecting a sufficient number of reliable samples is time-consuming, complex and costly in operational scenarios and can significantly affect the final accuracy of the DL methods. A widely accepted approach to address this problem is to exploit publicly available thematic products (e.g., Corine Land Cover [CLC] products) as labelling sources with zero annotation effort. Constructing such large-scale training sets at zero labelling cost is highly valuable. However, the LULC class labels available through these products can be noisy (wrong, incomplete, outdated, etc.), so their direct use introduces uncertainty in the DL models and hence in the LULC maps and scene predictions. In this talk, our recent developments to reduce the negative impact of noisy LULC class annotations in satellite image analysis problems will be introduced.
In particular, we will present our noise-robust collaborative learning method (RCML), which alleviates the adverse effects of label noise during the training phase of a Convolutional Neural Network model. RCML identifies, ranks and excludes noisy labels assigned to the images based on three main modules: 1) discrepancy module; 2) group lasso module; and 3) swap module. The discrepancy module ensures that the two networks learn diverse features while producing the same predictions. The group lasso module detects the potentially noisy labels assigned to the training images, while the swap module exchanges the ranking information between the two networks. The experiments conducted on two satellite image archives confirm the robustness of RCML under extreme label noise rates. Our code is publicly available online: http://www.noisy-labels-in-rs.org.

Generative Adversarial Networks for Extreme Super-Resolution and Downscaling of Wind Fields at Convection-Permitting Scales

1University of Victoria, 2Environment and Climate Change Canada

High-resolution (HR) regional climate models -- such as convection-permitting models (CPMs) -- can simulate meteorological phenomena better than coarse-resolution (CR) climate models, especially for variables strongly dependent on topography. However, due to their immense computational cost, CPM simulations are typically short (e.g., 1-2 decades) and cover limited spatial domains (e.g., regional to continental).

New machine learning (ML) algorithms -- in particular, those developed in computer vision for image super-resolution (SR) -- have begun to be used to downscale CR climate model outputs to the HR scales of observationally-constrained datasets. This work explores the ability of generative adversarial networks (GANs) to emulate HR simulations using CR model fields as inputs and considers the following topics:

(i) Many applications of GANs for downscaling consider input fields that are the HR fields coarsened by a simple function. Practical applications of GANs for downscaling may not have such strong consistency between the CR and HR datasets -- for example, a CPM is free to develop its own climatology and may diverge from its driving model inside the domain. Here, reanalysis fields are directly used to reconstruct the CPM and no coarsening function is used.

(ii) Few studies use recent, more stable GAN frameworks. Stability becomes increasingly relevant as researchers aim both to overcome large resolution gaps between CR and HR models and to customize their GANs with physics-informed model architectures and regularization terms. Here, a Wasserstein GAN with Gradient Penalty (WGAN-GP) was adopted and shown to be stable and to generalize well to unseen data despite the high SR scaling factor.

(iii) A novel frequency separation scheme was adopted from the computer vision literature to separate spatial scales in wind patterns. Low and high frequency spatial components were delegated to separate terms in the loss function. Several GANs were trained over three geographically separate regions to test this concept.
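
The frequency separation described in (iii) can be illustrated with a minimal sketch. The abstract does not state which filter or loss terms were used, so everything below is an assumption: a moving-average (box) low-pass filter, the high-frequency part taken as the residual, mean absolute error for both terms, and hypothetical names and weights (`box_lowpass`, `split_frequencies`, `separated_loss`, `w_low`, `w_high`).

```python
import numpy as np


def box_lowpass(field, k=5):
    """Low-pass filter a 2-D field with a k x k moving average (k odd, edge-padded)."""
    pad = k // 2
    padded = np.pad(field, pad, mode="edge")
    rows, cols = field.shape
    out = np.zeros((rows, cols), dtype=float)
    # Sum the k*k shifted copies of the padded field, then normalise.
    for di in range(k):
        for dj in range(k):
            out += padded[di:di + rows, dj:dj + cols]
    return out / (k * k)


def split_frequencies(field, k=5):
    """Return (low, high) spatial components; low + high reproduces the field."""
    low = box_lowpass(field, k)
    high = field - low
    return low, high


def separated_loss(pred, target, k=5, w_low=1.0, w_high=1.0):
    """Weighted sum of separate error terms for low and high spatial frequencies."""
    p_low, p_high = split_frequencies(pred, k)
    t_low, t_high = split_frequencies(target, k)
    low_term = np.mean(np.abs(p_low - t_low))
    high_term = np.mean(np.abs(p_high - t_high))
    return w_low * low_term + w_high * high_term
```

In a GAN setting, one plausible use of such a split is to penalise the low-frequency term directly while leaving the high-frequency detail to the adversarial term; whether the authors did this is not stated here.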

A machine learning correction model for the warm bias over Arctic sea ice in atmospheric reanalyses

1Alfred-Wegener-Institut für Polar- und Meeresforschung, 2ECMWF, 3NCAR

Atmospheric reanalyses are widely used as an estimate of the past atmospheric near-surface state over sea ice, providing crucial boundary conditions for sea ice and ocean simulations. Previous research revealed large near-surface (mostly warm) temperature biases over Arctic sea ice in most atmospheric reanalyses. These biases compromise the use of such products in support of sea ice research and are linked to a poor representation of the snow over the sea ice in the forecast models used to produce the reanalyses. Here, we train a fully connected neural network that learns from remote sensing infrared observations to correct the existing generation of reanalyses, including the ECMWF-developed ERA5, based on a set of sea ice and atmospheric predictors, which are themselves reanalysis products. The ML correction model proved to be skillful in reducing the model bias, particularly in the Arctic regions experiencing clear sky conditions and therefore a strong radiative cooling of the ice (or snow) surface. The impact of the correction on uncoupled sea ice and ocean simulations is quantified and compared to the effect of tuning key thermodynamic parameters of the sea ice model.

Spatially coherent postprocessing of cloud cover and precipitation forecasts using generative adversarial networks

1University of Zurich, 2ETH Zurich, 3MeteoSwiss

The generation of physically realistic postprocessed forecast scenarios calls for an appropriate representation of the spatiotemporal and intervariable dependence structure on top of providing well-calibrated marginal forecast distributions. Standard statistical postprocessing approaches like ensemble model output statistics do not account for the dependence structure. Therefore, an additional postprocessing step is required that reintroduces the dependence structure of a template forecast, e.g. the raw ensemble in the case of ensemble copula coupling (ECC). However, the advent of machine learning based postprocessing methods allows us to perform both steps simultaneously.
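
The two-step approach mentioned above, in which ECC reintroduces the dependence structure of the raw ensemble, can be sketched as follows. This is a minimal illustration under stated assumptions rather than any operational implementation: both inputs are arrays of shape (members, grid points), the calibrated samples already come from a univariate postprocessing step, and the function name `ecc_reorder` is hypothetical.

```python
import numpy as np


def ecc_reorder(raw_ensemble, calibrated_samples):
    """Reorder calibrated samples so they follow the rank order of the raw ensemble.

    Both inputs have shape (n_members, n_points). At each point, the member with
    the k-th smallest raw value receives the k-th smallest calibrated value.
    """
    raw = np.asarray(raw_ensemble, dtype=float)
    cal = np.asarray(calibrated_samples, dtype=float)
    if raw.shape != cal.shape:
        raise ValueError("raw_ensemble and calibrated_samples must share a shape")
    # Rank of each member at each point (0 = smallest); ties keep member order.
    ranks = np.argsort(np.argsort(raw, axis=0, kind="stable"), axis=0)
    cal_sorted = np.sort(cal, axis=0)
    return np.take_along_axis(cal_sorted, ranks, axis=0)


# Three members, two grid points.
raw = np.array([[3.0, 1.0], [1.0, 2.0], [2.0, 3.0]])
cal = np.array([[10.0, 30.0], [20.0, 10.0], [30.0, 20.0]])
scenarios = ecc_reorder(raw, cal)
```

The reordering leaves the calibrated marginal distribution at each point unchanged and only changes which member carries which value, which is why the spatial pattern of each raw member is inherited by the postprocessed scenario.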

Here, we focus on applying conditional generative adversarial networks (cGAN) to generate spatially realistic forecast scenarios. First, we compare different postprocessing approaches for hourly ensemble cloud cover predictions over Switzerland based on cGAN, ensemble model output statistics, and dense neural networks (dense NN). Using output of the high-resolution (2 km) limited area numerical weather model COSMO-E and the global ECMWF-IFS as raw ensemble predictors and EUMETSAT satellite data for verification, cGAN proved to generate realistic forecast scenario maps with univariate skill only slightly worse than the skill of dense NN and clearly better than direct model output (DMO). Secondly, we also consider cGAN-based postprocessing of daily COSMO-E precipitation over Switzerland. While cGAN scenario maps of daily precipitation look realistic from visual inspection, up to now, we have not been able to increase forecast skill compared to DMO. Possible reasons will be discussed.

Improvements of the Adriatic Deep-Learning Sea Level Modeling Network HIDRA

1Faculty of Computer and Information Science, Visual Cognitive Systems Lab, University of Ljubljana, Ljubljana, Slovenia, 2Slovenian Environment Agency, Group for Meteorological, Hydrological and Oceanographic Modelling, Ljubljana, Slovenia

We report on several improvements to the existing Adriatic deep-learning sea level and storm surge modeling architecture HIDRA1.0 [Žust et al., Geosci. Model Dev., 2021], currently operational at the Slovenian Environment Agency. The entire modeling framework was migrated from TensorFlow to PyTorch.

We re-examined the influence of the atmospheric encoder, which was originally based on Resnet20. Tests with the alternatives Resnet10, Resnet18, Resnet34 and Resnet101 revealed that Resnet18 provides improvements with a reduced number of parameters. Analysis of weight initialization in the temporal and spatial attention blocks showed that, compared to the initial random normal initialization, the Xavier normal initialization improves convergence time without compromising skill. Interestingly, removing the dropout blocks, which are usually included to reduce overfitting, leads to a significant accuracy boost on the test set. Compared to the initial architecture, these changes led to a total improvement in MAE of 10% and in RMSE of 8%. The network architecture was further modified to accommodate training and simultaneous prediction for multiple locations (most notably Venice, Grado and Ancona). We explore extending the atmospheric input with daily L3 scatterometer surface wind measurements to gauge ensemble wind field errors. Several other state-of-the-art improvements to remaining issues will be proposed.
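
For readers unfamiliar with the initialization comparison above, the following sketch shows what Xavier (Glorot) normal initialization does differently from a plain normal draw. It is written in NumPy for self-containment and is not the HIDRA code; the layer sizes, the unit standard deviation of the plain normal baseline, and the function names are assumptions. In PyTorch the same scheme is available as `torch.nn.init.xavier_normal_`.

```python
import numpy as np


def xavier_normal(fan_in, fan_out, rng, gain=1.0):
    """Draw weights with std = gain * sqrt(2 / (fan_in + fan_out))."""
    std = gain * np.sqrt(2.0 / (fan_in + fan_out))
    return rng.normal(0.0, std, size=(fan_out, fan_in))


def plain_normal(fan_in, fan_out, rng, std=1.0):
    """Draw weights from a normal distribution with a fixed, size-independent std."""
    return rng.normal(0.0, std, size=(fan_out, fan_in))


rng = np.random.default_rng(0)
x = rng.normal(size=256)  # input with roughly unit variance

w_xavier = xavier_normal(256, 256, rng)
w_plain = plain_normal(256, 256, rng)

# Spread of the layer outputs: Xavier keeps it close to the input spread,
# while the fixed-std draw inflates it with the layer width.
print("xavier output std:", (w_xavier @ x).std())
print("plain output std: ", (w_plain @ x).std())
```

Keeping activation scales roughly constant from layer to layer is the usual motivation for this scheme, which is consistent with the faster convergence reported above.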

Spatio-temporal Forecasting of Meteorological Visibility over Northwestern Morocco Using a Long Short-Term Memory (LSTM) Network

1Direction Générale de la Météorologie (DGM), Morocco, 2Direction Générale de la Météorologie, CNRM, 3Hassania School of Public Works, LaGeS/MoNum

Degradation of visibility due to foggy weather conditions is a common trigger for air traffic delays. In weather forecasting, spatio-temporal data have inherent dependencies that vary in space and have repercussions over time, and weather prediction models embody several aspects of uncertainty that propagate in space and time. This work proposes a way to trace this error propagation through deep learning (DL) algorithms by comparing three use cases in which the DL-model inputs are observations, raw past weather forecasts, or unbiased past weather forecasts. A long short-term memory (LSTM) network is the recurrent neural network (RNN) architecture used in this study. Owing to the special structure of its hidden layer units, it can preserve the trend information contained in long-term sequences and improve performance.
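
The hidden unit structure referred to above can be made concrete with a minimal sketch of one LSTM step in its standard textbook form. This is not the configuration used in the study: the gate ordering, weight shapes, and function names (`lstm_step`, `run_lstm`) are assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM step.

    x: (D,) input; h_prev, c_prev: (H,) previous hidden and cell states.
    W: (4H, D), U: (4H, H), b: (4H,), stacked in the order
    input gate, forget gate, cell candidate, output gate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])            # how much new information to write
    f = sigmoid(z[H:2 * H])        # how much of the old cell state to keep
    g = np.tanh(z[2 * H:3 * H])    # candidate cell content
    o = sigmoid(z[3 * H:4 * H])    # how much of the cell state to expose
    c = f * c_prev + i * g
    h = o * np.tanh(c)
    return h, c


def run_lstm(sequence, W, U, b):
    """Run the cell over a sequence of shape (T, D), starting from zero states."""
    H = U.shape[1]
    h = np.zeros(H)
    c = np.zeros(H)
    for x in sequence:
        h, c = lstm_step(x, h, c, W, U, b)
    return h, c
```

The additive update `c = f * c_prev + i * g` is what lets the cell state carry information across many time steps: when the forget gate stays near one and the input gate near zero, the state passes through almost unchanged.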